In the realm of machine learning, accuracy is often celebrated as the ultimate goal. Teams invest months in tuning parameters, engineering features, and achieving that coveted high validation score. Yet, the moment a model is deployed, reality begins to shift. Customer behaviour evolves, market conditions fluctuate, and external variables mutate in unpredictable ways. Over time, this leads to two silent yet inevitable phenomena — data drift and model decay.

These issues are rarely as dramatic as a system crash. They creep in quietly, eroding a model’s performance day by day until predictions once trusted begin to mislead. Despite the vast attention on model creation, it is in the post-deployment phase — the monitoring, updating, and recalibrating — that long-term success or failure is truly determined.

Understanding Data Drift

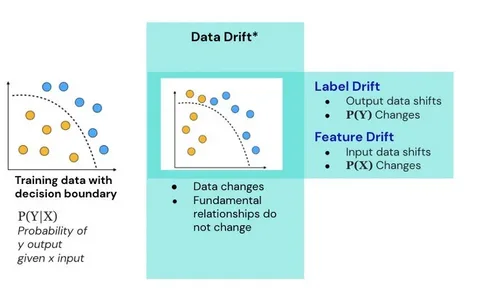

Data drift refers to the gradual change in the statistical nature of input variables, which causes a model’s assumptions and performance to deteriorate over time. It’s the equivalent of trying to navigate a city using a map that’s slowly becoming outdated.

For example, consider a credit scoring model trained on customer data before a major economic downturn. The model may have learned that stable employment and consistent credit card use indicate low risk. But when layoffs increase and financial behaviour shifts, the underlying assumptions no longer hold. The inputs remain valid, but what they say about risk has changed.

Drift can manifest in several ways: covariate drift changes the distribution of the input features, prior probability drift alters the class ratios of the target, and concept drift changes the relationship between inputs and outputs. Detecting and addressing these subtle transitions requires ongoing statistical vigilance and the use of automated monitoring systems.
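One way to keep the three kinds of drift apart is to write them as statements about probability distributions. The notation below is an assumption introduced for illustration: X stands for the model inputs, Y for the target, and the subscripts mark the training data and the live data.

```latex
\begin{align*}
\text{Covariate drift:} \quad & P_{\text{train}}(X) \neq P_{\text{live}}(X), \quad P_{\text{train}}(Y \mid X) = P_{\text{live}}(Y \mid X) \\
\text{Prior probability drift:} \quad & P_{\text{train}}(Y) \neq P_{\text{live}}(Y), \quad P_{\text{train}}(X \mid Y) = P_{\text{live}}(X \mid Y) \\
\text{Concept drift:} \quad & P_{\text{train}}(Y \mid X) \neq P_{\text{live}}(Y \mid X)
\end{align*}
```

In practice these rarely arrive in isolation. A downturn like the one in the credit scoring example can shift the inputs and the input-output relationship at the same time.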

The Reality of Model Decay

Model decay, often referred to as model degradation, is the gradual decline in a model’s predictive performance resulting from the combined effects of data drift, shifts in user behaviour, and evolving environments. Where data drift describes the change in the data, model decay describes its consequence: a model that stays fixed while the world moves on, and so grows stale.

In retail, for instance, recommendation systems trained on pre-pandemic consumer habits quickly lost relevance when shopping moved online. Similarly, fraud detection models degrade as cybercriminals constantly adapt their strategies. Even language models experience decay — phrases, slang, and public sentiment evolve faster than any static model can keep up with.

What makes model decay dangerous is its subtlety. Aggregate accuracy metrics might remain superficially stable for months, masking deteriorating performance on particular customer segments or edge cases. This false sense of confidence often leads businesses to make high-stakes decisions based on outdated intelligence.

Why Static Pipelines Are the Weak Link

Many organisations still rely on rigid, one-time deployment pipelines. Once a model goes live, it’s often left unmonitored, treated as a “finished product” rather than a dynamic system. These static pipelines may have worked when environments were relatively stable, but in today’s data-rich, real-time world, they are a liability.

The absence of continuous feedback mechanisms means performance degradation goes unnoticed until users or stakeholders point out anomalies. By that stage, reputational and financial costs can already be significant. Automated retraining, continuous validation, and performance alerting should be integral parts of every production system, not afterthoughts.

For aspiring professionals seeking to master this lifecycle approach, advanced learning through a data science course in Bangalore can provide valuable exposure to MLOps practices, data monitoring techniques, and model management tools such as MLflow or Kubeflow. These frameworks support experiment tracking, model versioning, and pipeline orchestration, which helps teams keep models in step with the data they depend on.

The Economic Impact of Neglected Drift

The consequences of ignoring data drift extend far beyond technical inconvenience. Businesses suffer real economic losses when their models become increasingly irrelevant.

In the financial sector, outdated models can misclassify loan applicants, leading to higher default rates or lost revenue from rejected but creditworthy candidates. In e-commerce, a decaying recommendation engine may reduce click-through rates, eroding both sales and customer engagement. Healthcare models trained on old patient data risk providing inaccurate diagnoses or treatment suggestions, potentially endangering lives.

Figures often quoted in industry discussions put the effect of undetected model decay at a 20–30% drop in performance metrics within a year of deployment, though the actual rate varies widely by domain and use case. The cost of rebuilding trust and recalibrating systems later is often significantly higher than the cost of proactive maintenance.

Strategies to Combat Drift and Decay

The first step in combating drift is continuous monitoring. Automated systems should track input distributions, output confidence, and prediction errors over time. Techniques such as the Kolmogorov–Smirnov test or Population Stability Index (PSI) can help quantify drift between the training and live data distributions.
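To make the two drift measures concrete, here is a minimal sketch in plain Python for a single numeric feature. The function names `psi` and `ks_statistic`, the choice of ten quantile bins, and the synthetic Gaussian samples are all assumptions made for illustration, not a prescribed implementation. In a real pipeline a library routine such as `scipy.stats.ks_2samp` would usually be preferable, since it also returns a p-value.

```python
import math
import random
from bisect import bisect_right


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample.

    Bin edges are quantiles of the reference sample, so each bin holds
    roughly the same share of reference values.
    """
    ref = sorted(expected)
    # Interior cut points at the 1/bins, 2/bins, ... quantiles of the reference.
    edges = [ref[int(len(ref) * i / bins)] for i in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or a division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Synthetic data for illustration only: one feature at training time,
# one live sample with no drift, and one live sample whose mean has shifted.
random.seed(0)
training = [random.gauss(0.0, 1.0) for _ in range(5000)]
live_same = [random.gauss(0.0, 1.0) for _ in range(5000)]
live_shifted = [random.gauss(0.5, 1.0) for _ in range(5000)]

for name, live in [("no drift", live_same), ("shifted", live_shifted)]:
    print(name, round(psi(training, live), 3), round(ks_statistic(training, live), 3))
```

A commonly quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but those cut-offs are conventions rather than guarantees, and sensible thresholds depend on the feature and the business context.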

Secondly, feedback loops should be established. Real-world outcomes must feed back into the system to refine future predictions and improve accuracy. This is particularly vital in dynamic fields such as fraud detection or stock forecasting, where rapid learning from recent patterns is crucial.
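A feedback loop can start out very simply. The sketch below assumes that true outcomes eventually arrive for each prediction and keeps a rolling window of hits and misses. The class name `FeedbackMonitor`, the window size, and the thresholds are hypothetical placeholders that a team would set for its own use case.

```python
from collections import deque


class FeedbackMonitor:
    """Tracks accuracy over the most recent labelled predictions."""

    def __init__(self, window=500, min_accuracy=0.85, min_samples=50):
        # deque with maxlen drops the oldest result once the window is full.
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy
        self.min_samples = min_samples

    def record(self, predicted, actual):
        """Store whether a prediction matched the outcome that later arrived."""
        self.results.append(predicted == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self):
        """True once enough outcomes are in and accuracy is below the threshold."""
        if len(self.results) < self.min_samples:
            return False
        return self.accuracy() < self.min_accuracy


# Illustrative usage with made-up predictions and outcomes.
monitor = FeedbackMonitor(window=4, min_accuracy=0.75, min_samples=4)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_retraining())
```

The `min_samples` guard is a deliberate design choice: it stops a handful of early misses from raising an alert before the window holds enough evidence.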

Another promising approach is online learning, where models are updated incrementally with each new data batch, rather than being retrained from scratch. This keeps the model adaptable at a lower computational cost than full retraining, although incremental updates still need monitoring, since a run of noisy or unrepresentative batches can pull the model off course. In some cases, ensembles of models can be rotated or weighted based on recent performance, maintaining diversity and robustness.
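As a rough illustration of incremental updating, the sketch below implements a tiny logistic regression trained by stochastic gradient descent, one batch at a time. Everything here is an assumption made for the example: the class `OnlineLogisticRegression`, the helper `make_batch`, the learning rate, and the synthetic data in which the input-output relationship flips halfway through. The method name `partial_fit` follows the convention scikit-learn uses for incremental estimators, but this is a standalone toy, not that library's implementation.

```python
import math
import random


class OnlineLogisticRegression:
    """Logistic regression updated one example at a time with SGD."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
        return 1.0 / (1.0 + math.exp(-z))

    def partial_fit(self, batch_x, batch_y):
        """Update the existing weights with a new batch; nothing is reset."""
        for x, y in zip(batch_x, batch_y):
            error = self.predict_proba(x) - y  # gradient of log loss w.r.t. z
            self.weights = [
                w - self.learning_rate * error * xi
                for w, xi in zip(self.weights, x)
            ]
            self.bias -= self.learning_rate * error


def make_batch(rng, size, flipped):
    """Hypothetical data source: one feature whose sign determines the label."""
    xs = [[rng.uniform(-1.0, 1.0)] for _ in range(size)]
    ys = [int((x[0] > 0) != flipped) for x in xs]
    return xs, ys


rng = random.Random(42)
model = OnlineLogisticRegression(n_features=1)

# Phase 1: positive feature values mean class 1.
for _ in range(20):
    model.partial_fit(*make_batch(rng, 100, flipped=False))
before = model.predict_proba([0.8])

# Phase 2: the relationship flips (concept drift); keep updating in place.
for _ in range(20):
    model.partial_fit(*make_batch(rng, 100, flipped=True))
after = model.predict_proba([0.8])

print(round(before, 3), round(after, 3))
```

The same input is scored before and after the flip, so the change in its predicted probability shows the model following the new pattern without ever being rebuilt from scratch.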

Lastly, cross-functional collaboration is key. Data scientists, engineers, and business leaders must jointly define performance thresholds, monitoring frequency, and retraining triggers. Model management isn’t just a technical exercise — it’s a business imperative.

The Role of Explainability and Ethics

When models evolve, so must our understanding of their behaviour. Explainable AI (XAI) tools are essential for identifying and diagnosing drift-related issues. By revealing which features are influencing predictions, these tools help analysts identify when and why a model’s logic begins to diverge from its intended behaviour.

Moreover, ethical oversight must remain a core principle. A decaying model can inadvertently introduce or amplify bias, particularly when demographic or behavioural patterns shift. Regular fairness audits ensure that evolving models continue to make equitable predictions across user groups.
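A fairness audit can begin with something as simple as comparing how often each group receives a positive prediction. The sketch below computes that gap, sometimes called the demographic parity difference. The function names and the toy data are assumptions for illustration, and demographic parity is only one of several fairness definitions, so it should be read as a starting point rather than a complete audit.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions, groups):
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up predictions for two hypothetical groups.
predictions = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rate_by_group(predictions, groups), parity_gap(predictions, groups))
```

Running the same check on each new window of live predictions, and comparing it with the value measured at deployment, gives an early signal that drift is affecting some groups more than others.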

For those pursuing a data science course in Bangalore, learning how to integrate ethical governance into MLOps pipelines is fast becoming as important as mastering algorithms themselves.

Conclusion

The silent erosion of accuracy caused by data drift and model decay is one of the most underestimated risks in modern machine learning. Static pipelines may deliver impressive results initially, but without adaptive feedback, they become relics in a constantly shifting landscape.

The future belongs to systems that can sense, learn, and adjust in real time — transforming machine learning from a one-time achievement into a living, breathing process. By treating model maintenance with the same seriousness as model building, organisations can protect not only performance but also trust. In the long run, the true cost of inaction is far greater than the investment required to keep models — and the decisions they inform — alive and relevant.